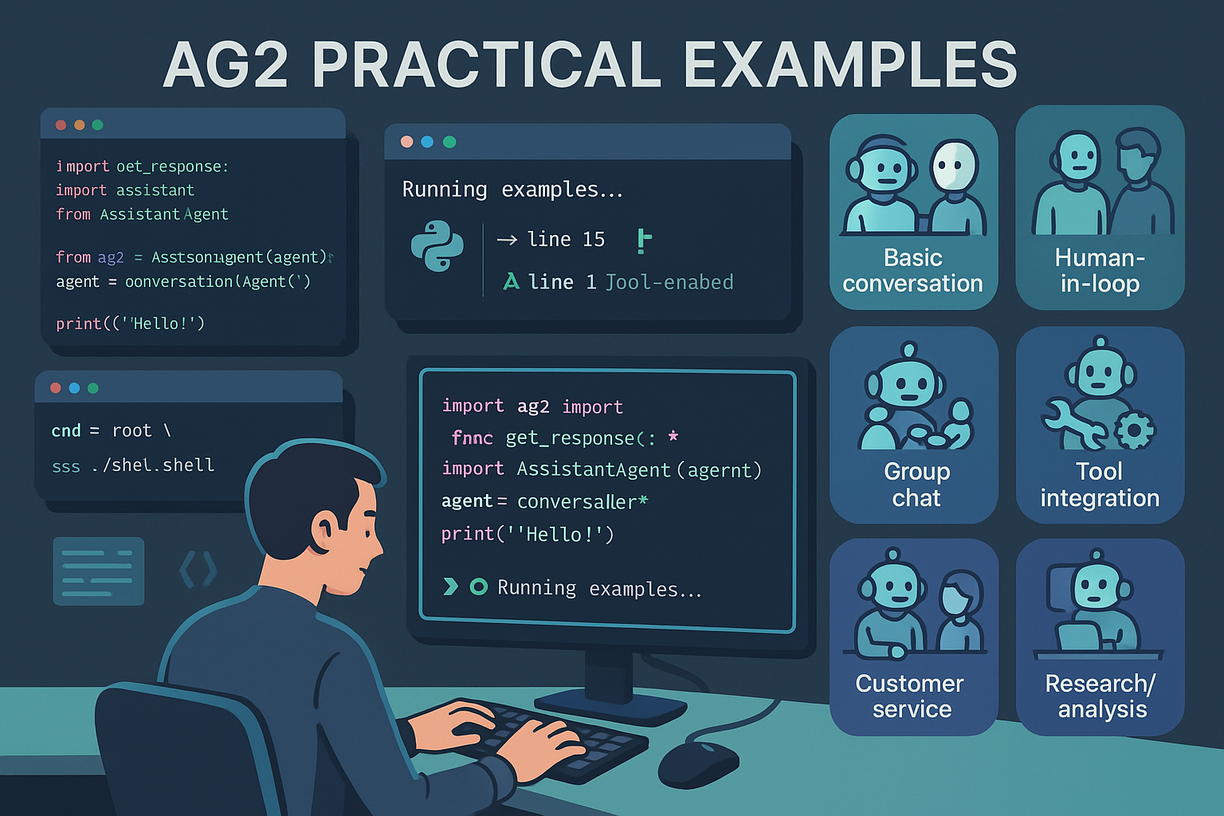

AG2 Practical Exercises

This document contains hands-on exercises designed to reinforce the concepts covered in the AG2 course. Each exercise builds upon previous knowledge and provides practical experience with different aspects of the framework.

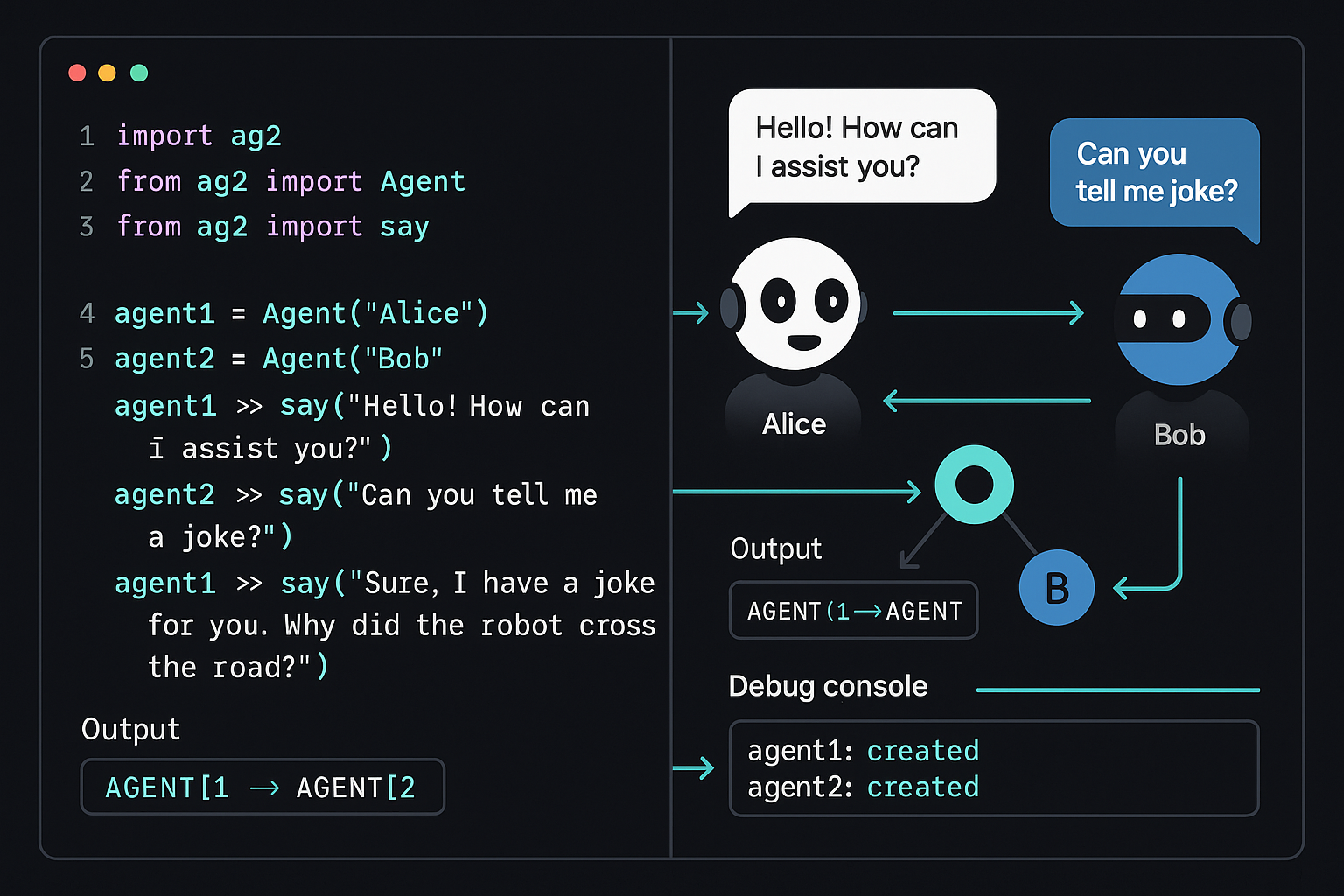

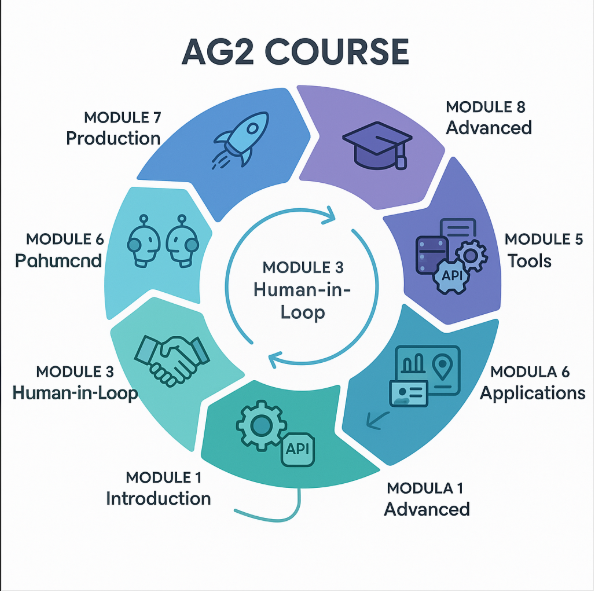

Exercise 1: Your First AG2 Agent

Objective: Create a basic conversable agent and understand fundamental concepts.

Instructions:

- Set up your development environment with AG2 installed

- Create a simple conversable agent that can answer questions about a specific topic

- Test the agent with various questions

- Experiment with different system messages and observe behavior changes

Starter Code:

```python
from autogen import ConversableAgent, LLMConfig

# TODO: Configure your LLM
llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

with llm_config:
    # TODO: Create your agent with an appropriate system message
    agent = ConversableAgent(
        name="your_agent",
        system_message="Your system message here",
    )

# TODO: Test your agent
# agent.generate_reply(messages=[{"role": "user", "content": "Your question here"}])
```

Expected Outcome: A working agent that can respond to questions in character.
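To compare system messages systematically, it helps to run the same question set against each variant and collect the replies side by side. The sketch below is plain Python, so it runs without an API key. `run_experiment`, `reply_text` and the `make_reply_fn` factory are hypothetical helpers, not part of AG2. The normalization step exists because `generate_reply` may return a string, a message dict, or `None`.

```python
from typing import Callable, Union

Reply = Union[str, dict, None]


def reply_text(reply: Reply) -> str:
    """Normalize a reply that may be a string, a message dict, or None."""
    if reply is None:
        return ""
    if isinstance(reply, dict):
        return reply.get("content") or ""
    return reply


def run_experiment(
    system_messages: dict[str, str],
    questions: list[str],
    make_reply_fn: Callable[[str], Callable[[str], Reply]],
) -> dict[str, dict[str, str]]:
    """Ask every question under every system message and collect the answers.

    make_reply_fn(system_message) must return a function that answers one question.
    """
    results: dict[str, dict[str, str]] = {}
    for label, system_message in system_messages.items():
        ask = make_reply_fn(system_message)
        results[label] = {q: reply_text(ask(q)) for q in questions}
    return results


# Stub factory so the harness runs offline; swap in a real agent for actual experiments.
def fake_factory(system_message: str) -> Callable[[str], Reply]:
    return lambda question: f"[{system_message}] {question}"


results = run_experiment(
    {"terse": "Answer in one sentence.", "teacher": "Explain step by step."},
    ["What is an agent?"],
    fake_factory,
)
print(results["terse"]["What is an agent?"])  # [Answer in one sentence.] What is an agent?
```

With AG2, `make_reply_fn` could create a `ConversableAgent` with the given `system_message` inside `with llm_config:` and return `lambda q: agent.generate_reply(messages=[{"role": "user", "content": q}])`.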

Exercise 2: Human-in-the-Loop Decision Making

Objective: Implement a workflow that requires human approval for certain actions.

Instructions:

- Create an agent that can make recommendations

- Implement human approval for high-impact decisions

- Test both automatic approval and human intervention scenarios

- Add error handling for invalid human input

Starter Code:

```python
from autogen import ConversableAgent, UserProxyAgent, LLMConfig

llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

with llm_config:
    # TODO: Create a recommendation agent
    recommender = ConversableAgent(
        name="recommender",
        system_message="You make recommendations and ask for approval when needed.",
    )

# TODO: Create a human proxy agent
# human_input_mode: "ALWAYS" asks the human on every turn, "NEVER" runs fully
# automatically, and "TERMINATE" asks only when a termination message arrives
# or the auto-reply limit is reached.
human_proxy = UserProxyAgent(
    name="human_proxy",
    human_input_mode="TERMINATE",  # Modify as needed
    code_execution_config={"work_dir": "temp", "use_docker": False},
)

# TODO: Implement the approval workflow
```

Expected Outcome: A system that can operate autonomously but seeks human approval for important decisions.
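One way to decide which recommendations need a human is an explicit impact threshold, paired with a prompt that re-asks on unrecognized input instead of failing. This plain-Python sketch assumes a hypothetical `estimated_cost` field and threshold; `requires_approval`, `ask_for_approval` and `decide` are illustrative names. Injecting `input_fn` makes both the automatic and the human-intervention paths testable; in the exercise it would default to `input`.

```python
from typing import Callable, Iterator

APPROVAL_THRESHOLD = 1_000  # hypothetical cost above which a human must approve

YES = {"y", "yes", "approve"}
NO = {"n", "no", "reject"}


def requires_approval(recommendation: dict) -> bool:
    """High-impact recommendations (by estimated cost) need a human decision."""
    return recommendation.get("estimated_cost", 0) > APPROVAL_THRESHOLD


def ask_for_approval(
    prompt: str,
    input_fn: Callable[[str], str] = input,
    max_attempts: int = 3,
) -> bool:
    """Ask until the answer is a recognized yes/no; exhausted attempts count as a rejection."""
    for _ in range(max_attempts):
        answer = input_fn(prompt).strip().lower()
        if answer in YES:
            return True
        if answer in NO:
            return False
        print(f"Unrecognized answer {answer!r}; please reply yes or no.")
    return False  # fail safe: no clear approval means no action


def decide(recommendation: dict, input_fn: Callable[[str], str] = input) -> bool:
    """Auto-approve low-impact items; escalate the rest to a human."""
    if not requires_approval(recommendation):
        return True
    return ask_for_approval(f"Approve '{recommendation['title']}'? [yes/no] ", input_fn)


# Simulated human who first types something invalid, then approves.
answers: Iterator[str] = iter(["maybe", "YES"])
print(decide({"title": "Buy new servers", "estimated_cost": 5_000}, lambda _: next(answers)))
```

Returning `False` after repeated invalid input is a deliberate fail-safe choice: an unclear answer should never trigger a high-impact action.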

Exercise 3: Multi-Agent Collaboration

Objective: Build a team of agents that work together to solve a complex problem.

Instructions:

- Create at least 3 specialized agents (e.g., researcher, analyst, writer)

- Implement a GroupChat to coordinate their collaboration

- Define clear roles and responsibilities for each agent

- Test the system with a complex task that requires all agents

Starter Code:

```python
from autogen import ConversableAgent, GroupChat, GroupChatManager, LLMConfig

llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

with llm_config:
    # TODO: Create specialized agents
    agent1 = ConversableAgent(
        name="agent1",
        system_message="Your role description",
        description="Brief description for group chat",  # used when choosing the next speaker
    )

    # TODO: Add more agents

    # TODO: Create coordinator agent with termination condition
    coordinator = ConversableAgent(
        name="coordinator",
        system_message="Coordinate the team and decide when task is complete",
        is_termination_msg=lambda x: "COMPLETE" in (x.get("content", "") or "").upper(),
    )

    # TODO: Set up GroupChat and GroupChatManager
    # (create the manager inside this block so it has an LLM for automatic speaker selection)

# TODO: Initiate collaboration on a complex task
```

Expected Outcome: A functioning team of agents that can collaborate effectively on multi-step tasks.
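The starter coordinator's substring check also fires on words like "incomplete" or "completed", which can end a collaboration early. A stricter predicate looks for a dedicated sentinel as a whole word. The token `TASK_COMPLETE` and the name `is_task_complete` are assumptions; whatever token you choose should also appear in the coordinator's system message so the model knows to emit it.

```python
import re

# Hypothetical sentinel; tell the coordinator to emit it only when the task is done.
TERMINATION_TOKEN = "TASK_COMPLETE"
_TERMINATION_RE = re.compile(rf"\b{re.escape(TERMINATION_TOKEN)}\b")


def is_task_complete(message: dict) -> bool:
    """Return True only if the message content contains the sentinel as a whole word."""
    content = message.get("content") or ""  # content can be None, e.g. for tool-call messages
    return bool(_TERMINATION_RE.search(content))


naive = lambda x: "COMPLETE" in (x.get("content", "") or "").upper()
msg = {"content": "The analysis is still incomplete."}
print(naive(msg), is_task_complete(msg))  # True False
```

In a group chat, passing the same predicate to the `GroupChatManager` via `is_termination_msg` is typically what stops the conversation; check the AG2 documentation for your version.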

Exercise 4: Tool Integration

Objective: Create agents that can use external tools to enhance their capabilities.

Instructions:

- Define at least 2 custom tools (functions) that agents can use

- Register these tools with appropriate agents

- Create a workflow where agents use tools to solve problems

- Implement proper error handling for tool failures

Starter Code:

```python
from typing import Annotated

from autogen import ConversableAgent, register_function, LLMConfig


# TODO: Define your custom tools
def your_tool(parameter: Annotated[str, "Parameter description"]) -> str:
    """Tool description for the agent."""
    # TODO: Implement your tool logic
    return "Tool result"


llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

with llm_config:
    # TODO: Create tool-using agent
    tool_agent = ConversableAgent(
        name="tool_agent",
        system_message="You can use tools to solve problems.",
    )

# TODO: Create executor agent
# It only runs tool calls, so it is created outside the `with` block
# and does not pick up the LLM configuration.
executor = ConversableAgent(
    name="executor",
    human_input_mode="NEVER",
)

# TODO: Register your tools
register_function(
    your_tool,
    caller=tool_agent,  # the agent whose LLM proposes the tool call
    executor=executor,  # the agent that actually runs the function
    description="Tool description",
)

# TODO: Test tool usage
```

Expected Outcome: Agents that can effectively use external tools to solve problems they couldn't handle with LLM capabilities alone.
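For the error-handling requirement, a common approach is to catch exceptions inside the tool and return a readable error string. The calling agent then sees what went wrong and can retry or rephrase, rather than the whole run crashing. The `safe_tool` decorator and both example tools are hypothetical. `functools.wraps` preserves the function name, docstring and `Annotated` hints, which are what describe the tool's parameters to the LLM.

```python
import functools
from typing import Annotated, Callable

# Simulated data source; a real tool might call an API or database instead.
ORDERS = {"A100": "shipped", "A101": "processing"}


def safe_tool(func: Callable[..., str]) -> Callable[..., str]:
    """Turn exceptions raised by a tool into an error message the LLM can read."""

    @functools.wraps(func)  # preserve name, docstring and annotations
    def wrapper(*args, **kwargs) -> str:
        try:
            return func(*args, **kwargs)
        except Exception as exc:  # report any failure back to the caller agent
            return f"Error in {func.__name__}: {type(exc).__name__}: {exc}"

    return wrapper


@safe_tool
def order_status(order_id: Annotated[str, "Order ID such as 'A100'"]) -> str:
    """Look up the shipping status of an order."""
    return f"Order {order_id} is {ORDERS[order_id.strip().upper()]}."


@safe_tool
def convert_celsius(celsius: Annotated[float, "Temperature in degrees Celsius"]) -> str:
    """Convert a Celsius temperature to Fahrenheit."""
    value = float(celsius)  # raises ValueError on non-numeric input
    return f"{value:.1f} C = {value * 9 / 5 + 32:.1f} F"


print(order_status("a100"))     # Order a100 is shipped.
print(order_status("Z999"))     # Error in order_status: KeyError: 'Z999'
print(convert_celsius("warm"))  # Error in convert_celsius: ValueError: could not convert string to float: 'warm'
```

The decorated functions can then be registered the same way as `your_tool` in the starter code, for example `register_function(order_status, caller=tool_agent, executor=executor, description="Look up an order's status")`.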

Exercise 5: Customer Support System

Objective: Build a complete customer support automation system.

Instructions:

- Create agents for different support functions (triage, technical, billing)

- Implement routing logic based on customer inquiry type

- Add escalation procedures for complex issues

- Include quality assurance and follow-up mechanisms
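Routing is often handled by an LLM triage agent, but a deterministic keyword fallback is useful for testing the flow and for cases where the model is unsure. This plain-Python sketch is not an AG2 feature. The queues, keywords, and the rule that angry or repeated contacts go to a human are illustrative assumptions to adapt.

```python
from dataclasses import dataclass

# Hypothetical keyword lists for each support queue.
ROUTES = {
    "billing": ("invoice", "refund", "charge", "payment", "subscription"),
    "technical": ("error", "crash", "bug", "login", "install"),
    "account": ("password", "email change", "delete account", "profile"),
}
ESCALATION_WORDS = ("lawyer", "cancel everything", "unacceptable", "complaint")
MAX_CONTACTS_BEFORE_ESCALATION = 3


@dataclass
class Ticket:
    text: str
    previous_contacts: int = 0


def route(ticket: Ticket) -> str:
    """Return a specialist queue name, 'human' for escalation, or 'triage' if unclear."""
    text = ticket.text.lower()
    if any(word in text for word in ESCALATION_WORDS):
        return "human"
    if ticket.previous_contacts >= MAX_CONTACTS_BEFORE_ESCALATION:
        return "human"  # repeated contacts suggest automation is not resolving the issue
    matches = [queue for queue, words in ROUTES.items() if any(w in text for w in words)]
    if len(matches) == 1:
        return matches[0]
    return "triage"  # zero or several matches: let the triage agent ask a clarifying question


print(route(Ticket("I was charged twice, please refund me")))  # billing
print(route(Ticket("The app shows an error on login")))         # technical
print(route(Ticket("This is unacceptable!")))                   # human
```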

Requirements:

- Handle at least 3 different types of customer inquiries

- Implement proper escalation to human agents when needed

- Maintain conversation context throughout the support process

- Generate summary reports of support interactions
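For the summary-report requirement, each interaction can be logged as a simple record and the records aggregated at the end of a session. The record fields (`category`, `escalated`, `resolved`) and the function name are assumptions; the same data could equally be extracted from the agents' chat histories.

```python
from collections import Counter


def summarize_interactions(interactions: list[dict]) -> str:
    """Build a short plain-text report from per-ticket records."""
    total = len(interactions)
    by_category = Counter(item["category"] for item in interactions)
    escalated = sum(1 for item in interactions if item.get("escalated"))
    resolved = sum(1 for item in interactions if item.get("resolved"))
    lines = [f"Total interactions: {total}"]
    for category, count in sorted(by_category.items()):
        lines.append(f"  {category}: {count}")
    lines.append(f"Escalated to a human: {escalated}")
    rate = resolved / total if total else 0.0
    lines.append(f"Resolved: {resolved} ({rate:.0%})")
    return "\n".join(lines)


log = [
    {"category": "billing", "escalated": False, "resolved": True},
    {"category": "technical", "escalated": True, "resolved": False},
    {"category": "billing", "escalated": False, "resolved": True},
]
print(summarize_interactions(log))
```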

Expected Outcome: A working customer support system that can handle common inquiries automatically while escalating complex issues appropriately.

Exercise 6: Research Assistant

Objective: Create a research assistant that can gather, analyze, and synthesize information.

Instructions:

- Build agents for information gathering, analysis, and report writing

- Implement fact-checking and validation processes

- Create structured output formats for research results

- Add capabilities for handling multiple research topics simultaneously
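Handling several topics at once maps naturally onto `asyncio`: run one research pipeline per topic concurrently, and cap how many run at the same time to respect API rate limits. Here `research_topic` is a stand-in coroutine; with AG2 it would wrap an async chat, since agents expose async variants such as `a_initiate_chat`. The concurrency limit is an assumed value.

```python
import asyncio

MAX_CONCURRENT = 2  # assumed limit to stay within API rate limits


async def research_topic(topic: str) -> dict:
    """Stand-in for a gather -> analyze -> write pipeline on one topic."""
    await asyncio.sleep(0.01)  # simulate waiting on model or tool calls
    return {"topic": topic, "summary": f"Key findings about {topic}"}


async def research_all(topics: list[str]) -> list:
    """Research all topics concurrently; results come back in input order."""
    semaphore = asyncio.Semaphore(MAX_CONCURRENT)

    async def limited(topic: str) -> dict:
        async with semaphore:  # at most MAX_CONCURRENT topics in flight
            return await research_topic(topic)

    # return_exceptions=True keeps one failed topic from cancelling the others
    return await asyncio.gather(*(limited(t) for t in topics), return_exceptions=True)


results = asyncio.run(research_all(["solar storage", "grid batteries", "hydrogen"]))
for result in results:
    print(result)
```

With `return_exceptions=True`, a failed topic appears in `results` as an exception object, so callers should check each entry before using it.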

Requirements:

- Gather information from multiple sources (simulated)

- Perform analysis and identify key insights

- Generate well-structured research reports

- Include citations and source validation
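A structured output format makes fact-checking mechanical. In this sketch each finding records which sources support it, and the report refuses to render if a finding cites nothing or cites an unknown source. The dataclasses are an assumed schema, not an AG2 type; an analysis agent could be asked to emit JSON matching it. The sample source and claim are placeholders for the simulated data the exercise calls for.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    id: str
    title: str
    url: str


@dataclass
class Finding:
    claim: str
    source_ids: list[str] = field(default_factory=list)


@dataclass
class ResearchReport:
    topic: str
    sources: list[Source]
    findings: list[Finding]

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means every claim is cited."""
        known = {s.id for s in self.sources}
        problems = []
        for i, finding in enumerate(self.findings, start=1):
            if not finding.source_ids:
                problems.append(f"Finding {i} has no citation")
            for sid in finding.source_ids:
                if sid not in known:
                    problems.append(f"Finding {i} cites unknown source {sid!r}")
        return problems

    def to_markdown(self) -> str:
        """Render the report, refusing to do so if validation fails."""
        problems = self.validate()
        if problems:
            raise ValueError("; ".join(problems))
        lines = [f"# {self.topic}", "", "## Findings"]
        for finding in self.findings:
            refs = ", ".join(f"[{sid}]" for sid in finding.source_ids)
            lines.append(f"- {finding.claim} {refs}")
        lines += ["", "## Sources"]
        lines += [f"- [{s.id}] {s.title} ({s.url})" for s in self.sources]
        return "\n".join(lines)


report = ResearchReport(
    topic="Battery storage",
    sources=[Source("S1", "Simulated market survey", "https://example.com/survey")],
    findings=[Finding("Placeholder claim from the survey.", ["S1"]), Finding("Uncited claim.")],
)
print(report.validate())  # ['Finding 2 has no citation']
```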

Expected Outcome: A research assistant system that can conduct comprehensive research and produce high-quality reports.

Exercise 7: Creative Content Generation

Objective: Build a creative team that can generate and refine content collaboratively.

Instructions:

- Create agents for ideation, writing, editing, and review

- Implement iterative refinement processes

- Add style and quality guidelines

- Include feedback incorporation mechanisms

Requirements:

- Generate creative content ideas

- Produce initial drafts based on ideas

- Implement editing and refinement cycles

- Ensure final output meets quality standards
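The editing-and-refinement cycle is essentially a loop: draft, review, and revise using the feedback. It stops when the review passes or a round limit is reached, so a strict reviewer cannot loop forever. The `write`, `review` and `revise` callables are hypothetical stand-ins for calls to writer and reviewer agents. The 0 to 10 score scale, the threshold and the round limit are assumptions.

```python
from typing import Callable, NamedTuple


class Review(NamedTuple):
    score: int     # assumed 0-10 quality score from the reviewer
    feedback: str


def refine(
    idea: str,
    write: Callable[[str], str],
    review: Callable[[str], Review],
    revise: Callable[[str, str], str],
    threshold: int = 8,
    max_rounds: int = 3,
) -> tuple[str, list[Review]]:
    """Draft once, then review and revise until the score meets the threshold.

    The returned draft is always the last one reviewed, so history[-1] is its review.
    """
    draft = write(idea)
    history: list[Review] = []
    for round_no in range(1, max_rounds + 1):
        result = review(draft)
        history.append(result)
        if result.score >= threshold or round_no == max_rounds:
            break
        draft = revise(draft, result.feedback)
    return draft, history


def fake_review(draft: str) -> Review:
    """Stub reviewer: each revision raises the score by 2."""
    return Review(score=5 + 2 * draft.count("[revised]"), feedback="Add a concrete example.")


final, history = refine(
    "remote work",
    write=lambda idea: f"Draft about {idea}.",
    review=fake_review,
    revise=lambda draft, feedback: f"{draft} [revised]",  # a real reviser would apply the feedback
)
print([r.score for r in history])  # [5, 7, 9]
```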

Expected Outcome: A creative content generation system that can produce high-quality content through collaborative agent interaction.

Exercise 8: Production Deployment

Objective: Deploy an AG2 application in a production-like environment.

Instructions:

- Choose one of your previous exercises to deploy

- Implement proper logging and monitoring

- Add error handling and recovery mechanisms

- Create deployment documentation and procedures
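For error handling and recovery, transient failures such as network timeouts are commonly retried with exponential backoff. The final failure is logged and re-raised for an outer handler. This generic sketch uses only the standard library and covers the logging requirement as well. Which exceptions count as transient is an assumption; narrow `TRANSIENT_ERRORS` to the errors your LLM client actually raises.

```python
import logging
import random
import time
from typing import Callable, TypeVar

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(name)s: %(message)s")
logger = logging.getLogger("ag2_app")

T = TypeVar("T")

TRANSIENT_ERRORS = (TimeoutError, ConnectionError)  # assumption: adjust for your client library


def with_retries(
    func: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying transient errors with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except TRANSIENT_ERRORS as exc:
            if attempt == attempts:
                logger.error("giving up after %d attempts: %s", attempt, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay)
            logger.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)
    raise RuntimeError("unreachable")  # the loop always returns or raises


calls = {"n": 0}


def flaky() -> str:
    """Fails twice with a transient error, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network problem")
    return "ok"


print(with_retries(flaky, sleep=lambda _: None))  # ok (after two logged retries)
```

Errors that are not in `TRANSIENT_ERRORS`, such as a `ValueError` from bad input, propagate immediately, since retrying them would not help.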

Requirements:

- Containerize your application

- Implement health checks and monitoring

- Add configuration management

- Include backup and recovery procedures

- Document deployment and operational procedures
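Configuration management and health checks can start small. Read settings from environment variables, which container platforms make easy to inject. Validate them at startup, and expose a health function that a container probe or a `/health` endpoint could call. The variable names `AG2_MODEL` and `AG2_MAX_ROUNDS`, the defaults, and the checks themselves are assumptions for illustration.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class AppConfig:
    model: str
    max_rounds: int
    api_key_present: bool


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Read settings from the environment and fail fast on invalid values."""
    model = env.get("AG2_MODEL", "gpt-4o-mini")
    raw_rounds = env.get("AG2_MAX_ROUNDS", "10")
    try:
        max_rounds = int(raw_rounds)
    except ValueError:
        raise ValueError(f"AG2_MAX_ROUNDS must be an integer, got {raw_rounds!r}") from None
    if max_rounds < 1:
        raise ValueError("AG2_MAX_ROUNDS must be at least 1")
    # Record only whether the key exists; never log or store the secret itself.
    return AppConfig(model=model, max_rounds=max_rounds, api_key_present=bool(env.get("OPENAI_API_KEY")))


def health_check(config: AppConfig) -> dict:
    """Cheap readiness check suitable for a container health probe."""
    checks = {"config_loaded": True, "api_key_present": config.api_key_present}
    return {"status": "ok" if all(checks.values()) else "degraded", "checks": checks}


config = load_config({"AG2_MODEL": "gpt-4o-mini", "AG2_MAX_ROUNDS": "12"})
print(config)
print(health_check(config))  # status is "degraded" because no API key was provided
```

In a container, the same variables would be supplied with `docker run -e ...` or through the orchestrator's environment settings, so one image can serve several environments.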

Expected Outcome: A production-ready AG2 application with proper operational support.

Capstone Project: Multi-Agent Business Application

Objective: Create a comprehensive business application using AG2 that demonstrates mastery of all course concepts.

Project Options:

- E-commerce Management System: Order processing, inventory management, customer service

- Content Marketing Platform: Content planning, creation, review, and publication

- Project Management Assistant: Task planning, resource allocation, progress tracking

- Educational Platform: Course creation, student assessment, personalized learning

Requirements:

- Use at least 5 different agents with specialized roles

- Implement human-in-the-loop workflows where appropriate

- Include tool integration for external services

- Add comprehensive error handling and recovery

- Implement monitoring and logging

- Create user documentation and deployment guides

- Include testing and quality assurance procedures

Deliverables:

- Complete working application

- Architecture documentation

- User manual

- Deployment guide

- Testing documentation

- Presentation of your solution

Evaluation Criteria:

- Functionality and completeness

- Code quality and organization

- Documentation quality

- Innovation and creativity

- Practical utility and usability

Additional Practice Challenges

Challenge 1: Agent Personality Development

Create agents with distinct personalities that affect their communication style and decision-making patterns.

Challenge 2: Dynamic Agent Creation

Build a system that can create new agents dynamically based on changing requirements.
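Dynamic creation mostly comes down to turning a requirement into constructor arguments at runtime. This sketch keeps that step in plain Python: a hypothetical role catalog produces keyword-argument dicts, which could then be passed as `ConversableAgent(**spec)` inside `with llm_config:`. The catalog and role names are assumptions. The name rule (letters, digits, underscores and hyphens, at most 64 characters) is a conservative choice, because some LLM APIs reject other characters in names.

```python
import re

# Hypothetical catalog of roles the system knows how to create.
ROLE_TEMPLATES = {
    "researcher": "You gather facts about {topic} and list your sources.",
    "analyst": "You analyze findings about {topic} and highlight key insights.",
    "translator": "You translate content about {topic} into {language}.",
}


def make_agent_spec(role: str, **params: str) -> dict:
    """Build ConversableAgent keyword arguments for a role by filling in its template."""
    if role not in ROLE_TEMPLATES:
        raise ValueError(f"Unknown role {role!r}; known roles: {sorted(ROLE_TEMPLATES)}")
    system_message = ROLE_TEMPLATES[role].format(**params)  # KeyError if a parameter is missing
    suffix = "_".join(params.values())
    name = re.sub(r"[^A-Za-z0-9_-]", "_", f"{role}_{suffix}" if suffix else role)
    return {
        "name": name[:64],
        "system_message": system_message,
        "description": f"{role.capitalize()} agent created at runtime",
    }


def specs_for(requirements: list[dict]) -> list[dict]:
    """Create one spec per requirement, e.g. {"role": "translator", "topic": ..., "language": ...}."""
    return [
        make_agent_spec(req["role"], **{k: v for k, v in req.items() if k != "role"})
        for req in requirements
    ]


specs = specs_for([
    {"role": "researcher", "topic": "solar power"},
    {"role": "translator", "topic": "solar power", "language": "Spanish"},
])
print([s["name"] for s in specs])  # ['researcher_solar_power', 'translator_solar_power_Spanish']
```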

Challenge 3: Multi-Language Support

Implement agents that can communicate in multiple languages and translate between them.

Challenge 4: Performance Optimization

Optimize an existing multi-agent system for better performance and resource utilization.
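A cheap first optimization is to avoid repeating identical expensive work, such as deterministic tool calls made with the same arguments. This sketch wraps a hypothetical lookup tool in the standard library's `functools.lru_cache` and counts real calls to show the effect. It only suits pure, deterministic functions with hashable arguments; the rates are placeholder values.

```python
import functools

CALLS = {"count": 0}


@functools.lru_cache(maxsize=256)
def exchange_rate(base: str, quote: str) -> float:
    """Hypothetical slow lookup; the body stands in for a network or database call."""
    CALLS["count"] += 1
    rates = {("USD", "EUR"): 0.9, ("EUR", "USD"): 1.1}  # placeholder values, not real rates
    return rates[(base, quote)]


for _ in range(5):
    exchange_rate("USD", "EUR")  # only the first call performs the lookup

print(CALLS["count"], exchange_rate.cache_info().hits)  # 1 4
```

Measure before and after any such change: caching helps only when repeated identical calls actually occur in your workload.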

Challenge 5: Security Implementation

Add comprehensive security features to protect sensitive data and prevent unauthorized access.
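One concrete protection for sensitive data is to redact obvious identifiers before text reaches an LLM or a log file. The patterns below are deliberately simple assumptions: email addresses, card-like runs of 13 to 16 digits, and `sk-` style keys. Real deployments usually need broader, tested patterns or a dedicated PII tool. In an AG2 workflow, a function like `redact` could be applied to user input before it is sent to any agent.

```python
import re

# Simple, illustrative patterns; they will miss some formats and may over-match others.
PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,15}\d\b")),
    ("API_KEY", re.compile(r"\bsk-[A-Za-z0-9_-]{16,}\b")),
]


def redact(text: str) -> str:
    """Replace sensitive-looking substrings with placeholder tags such as [EMAIL]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111."))
# Contact [EMAIL], card [CARD].
```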

Submission Guidelines

For each exercise:

- Submit complete, working code

- Include a README with setup and usage instructions

- Provide test cases and example outputs

- Document any assumptions or limitations

- Include a brief reflection on what you learned

Getting Help

- Review the course materials and examples

- Check the official AG2 documentation

- Join the AG2 Discord community for support

- Participate in study groups and peer discussions

Assessment Rubric

Excellent (90-100%):

- Code works perfectly and handles edge cases

- Clean, well-documented, and maintainable code

- Creative solutions that go beyond basic requirements

- Comprehensive testing and error handling

Good (80-89%):

- Code works correctly for most scenarios

- Generally well-structured with adequate documentation

- Meets all basic requirements

- Some testing and error handling

Satisfactory (70-79%):

- Code works for basic scenarios

- Meets minimum requirements

- Basic documentation provided

- Limited testing

Needs Improvement (Below 70%):

- Code has significant issues or doesn't work

- Missing key requirements

- Poor documentation or structure

- No testing or error handling

Remember: The goal is to learn and practice. Don't hesitate to experiment, make mistakes, and iterate on your solutions!
