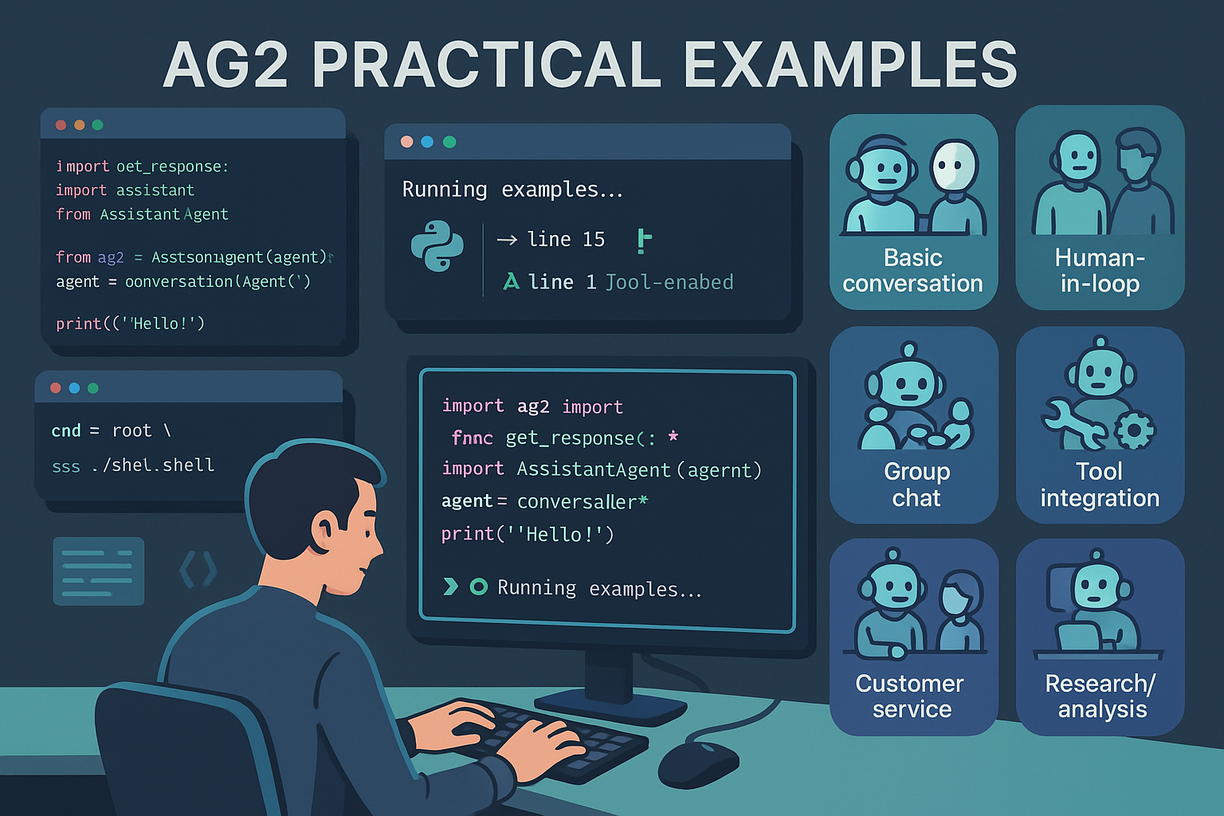

AG2 Practical Examples

0 comments

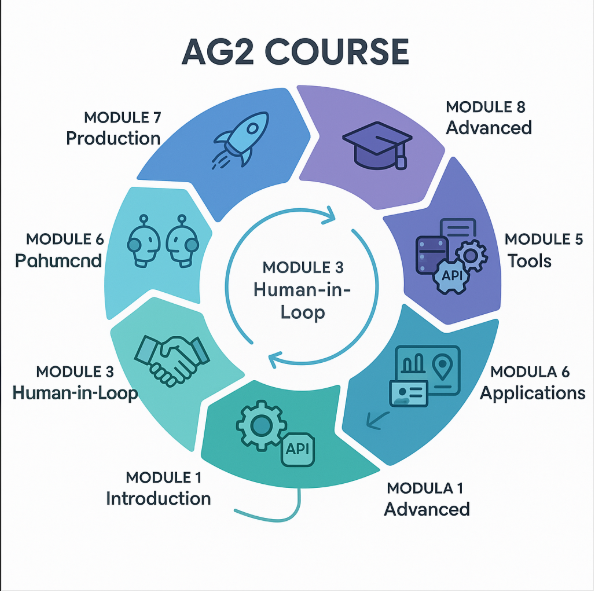

"""
This file contains practical code examples for learning AG2 development.
Each example demonstrates key concepts and patterns covered in the course.

Requirements:
- Python >= 3.9, < 3.14
- ag2[openai] package
- OpenAI API key set as OPENAI_API_KEY environment variable

Author: Manus AI
Version: 1.0
"""

import os

from typing import Annotated

from datetime import datetime

from autogen import ConversableAgent, UserProxyAgent, GroupChat, GroupChatManager, LLMConfig, register_function
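The requirements listed in the module docstring can be verified up front rather than discovered through a failed API call. The sketch below is one possible way to do that; `check_requirements` is a hypothetical helper introduced here for illustration and is not part of AG2. It only checks the Python version range and the `OPENAI_API_KEY` variable, not whether the `ag2[openai]` package is installed.

```python
import os
import sys


def check_requirements(version_info=sys.version_info, environ=os.environ):
    """Return a list of problems; an empty list means the checks passed.

    Hypothetical helper: the version bounds mirror the docstring
    (Python >= 3.9, < 3.14) and the key name is OPENAI_API_KEY.
    """
    problems = []
    major_minor = tuple(version_info[:2])
    if not ((3, 9) <= major_minor < (3, 14)):
        problems.append(
            f"Python {major_minor[0]}.{major_minor[1]} is outside "
            "the supported range >= 3.9, < 3.14"
        )
    if not environ.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    return problems


if __name__ == "__main__":
    for problem in check_requirements():
        print(f"Requirement not met: {problem}")
```

Passing the version tuple and environment mapping as parameters keeps the function easy to exercise without changing the real interpreter or environment.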

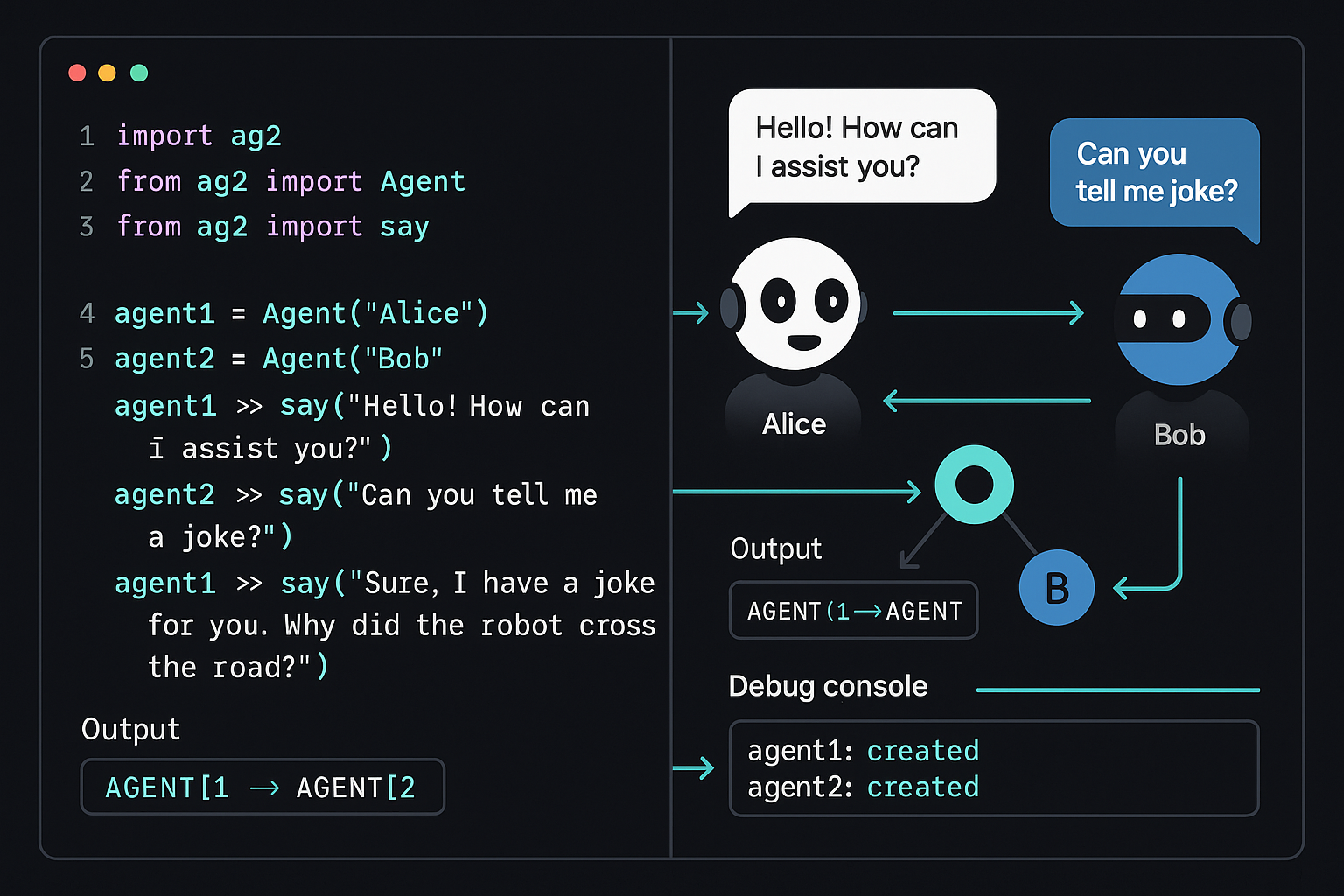

Example 1: Basic Two-Agent Conversation

def example_1_basic_conversation():
    """
    Example 1: Basic Two-Agent Conversation

    This example demonstrates the fundamental concept of two agents
    communicating with each other using AG2.
    """
    print("=== Example 1: Basic Two-Agent Conversation ===")

    # Configure LLM
    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        # Create two conversable agents
        assistant = ConversableAgent(
            name="assistant",
            system_message="You are a helpful assistant that provides concise, accurate information.",
        )
        fact_checker = ConversableAgent(
            name="fact_checker",
            system_message="You are a fact-checking assistant that verifies information accuracy.",
        )

    # Start the conversation
    result = assistant.initiate_chat(
        recipient=fact_checker,
        message="What is AG2 and how does it differ from the original AutoGen?",
        max_turns=3,
    )

    print("Conversation completed!")
    return result

Example 2: Human-in-the-Loop Workflow

def example_2_human_in_loop():
    """
    Example 2: Human-in-the-Loop Workflow

    This example shows how to integrate human oversight into agent workflows.
    """
    print("=== Example 2: Human-in-the-Loop Workflow ===")

    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        assistant = ConversableAgent(
            name="assistant",
            system_message="You are a helpful assistant for data analysis tasks.",
        )

    # Create a human proxy agent
    human_proxy = UserProxyAgent(
        name="human_proxy",
        human_input_mode="ALWAYS",  # Requires human input for every response
        code_execution_config={"work_dir": "coding", "use_docker": False},
    )

    # Start the chat with human involvement
    result = human_proxy.initiate_chat(
        recipient=assistant,
        message="Help me analyze some sales data. What steps should we take?",
        max_turns=2,
    )

    return result

Example 3: Group Chat with Multiple Agents

def example_3_group_chat():
    """
    Example 3: Group Chat with Multiple Agents

    This example demonstrates multi-agent collaboration using GroupChat.
    """
    print("=== Example 3: Group Chat with Multiple Agents ===")

    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        # Create specialized agents
        researcher = ConversableAgent(
            name="researcher",
            system_message="You are a research specialist who gathers and analyzes information.",
            description="Gathers and analyzes information on given topics.",
        )
        writer = ConversableAgent(
            name="writer",
            system_message="You are a content writer who creates clear, engaging content.",
            description="Creates clear, engaging written content based on research.",
        )
        reviewer = ConversableAgent(
            name="reviewer",
            system_message="You are a content reviewer who ensures quality and accuracy.",
            description="Reviews content for quality, accuracy, and clarity.",
        )
        coordinator = ConversableAgent(
            name="coordinator",
            system_message="You coordinate the team and make final decisions. Say 'DONE!' when satisfied.",
            is_termination_msg=lambda x: "DONE!" in (x.get("content", "") or "").upper(),
        )

    # Create group chat
    group_chat = GroupChat(
        agents=[coordinator, researcher, writer, reviewer],
        speaker_selection_method="auto",
        messages=[],
    )

    # Create group chat manager
    manager = GroupChatManager(
        name="group_manager",
        groupchat=group_chat,
        llm_config=llm_config,
    )

    # Start group discussion
    result = coordinator.initiate_chat(
        recipient=manager,
        message="Let's create a brief guide about the benefits of multi-agent AI systems.",
    )

    return result
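The `is_termination_msg` lambda used for the coordinator packs three steps into one expression: read the `content` field, fall back to an empty string when it is missing or `None`, and do a case-insensitive keyword search. A minimal sketch of the same logic as a named, reusable function follows. `make_termination_check` is a hypothetical helper, not an AG2 API, and it assumes `content` is either a string or `None`.

```python
def make_termination_check(keyword: str):
    """Build a predicate that reports whether a message contains `keyword`.

    Hypothetical helper for illustration; the message is assumed to be a
    dict whose "content" value is a string or None.
    """
    keyword_upper = keyword.upper()

    def is_termination_msg(message: dict) -> bool:
        # `or ""` covers both a missing key and an explicit None value.
        content = message.get("content", "") or ""
        return keyword_upper in content.upper()

    return is_termination_msg


check_done = make_termination_check("DONE!")
print(check_done({"content": "Looks good. Done!"}))  # True
print(check_done({"content": None}))                 # False
print(check_done({}))                                # False
```

A predicate built this way could be passed wherever the examples pass the lambda. Which agents in a group chat need it in order for the chat to stop promptly is worth confirming against the AG2 documentation for the installed version.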

Example 4: Tool Integration

def example_4_tool_integration():
    """
    Example 4: Tool Integration

    This example shows how to integrate external tools with AG2 agents.
    """
    print("=== Example 4: Tool Integration ===")

    # Define a custom tool
    def get_current_time(timezone: Annotated[str, "Timezone (e.g., 'UTC', 'EST')"]) -> str:
        """Get the current time in the specified timezone."""
        # Simplified implementation - in practice, you'd use proper timezone handling
        current_time = datetime.now()
        return f"Current time in {timezone}: {current_time.strftime('%Y-%m-%d %H:%M:%S')}"

    def calculate_age(birth_year: Annotated[int, "Birth year (YYYY)"]) -> str:
        """Calculate age based on birth year."""
        current_year = datetime.now().year
        age = current_year - birth_year
        return f"Age: {age} years old"

    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        # Create agents
        tool_agent = ConversableAgent(
            name="tool_agent",
            system_message="You can use tools to get current time and calculate ages.",
        )
        executor_agent = ConversableAgent(
            name="executor_agent",
            human_input_mode="NEVER",
        )

    # Register tools with agents
    register_function(
        get_current_time,
        caller=tool_agent,
        executor=executor_agent,
        description="Get current time in specified timezone",
    )
    register_function(
        calculate_age,
        caller=tool_agent,
        executor=executor_agent,
        description="Calculate age based on birth year",
    )

    # Start conversation with tool usage
    result = executor_agent.initiate_chat(
        recipient=tool_agent,
        message="What's the current time in UTC? Also, if someone was born in 1990, how old are they?",
        max_turns=3,
    )

    return result
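The `get_current_time` tool above is marked as simplified: it accepts a timezone name but always reports the machine's local time. The sketch below shows one way the "proper timezone handling" mentioned in that comment might look, using the standard-library `zoneinfo` module (available from Python 3.9). The name `get_current_time_tz` and the error-message wording are assumptions made for this illustration.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError


def get_current_time_tz(tz_name: str) -> str:
    """Return the current time in the named IANA timezone.

    Hypothetical variant of get_current_time. "UTC" is special-cased so
    it works even where no timezone database is installed.
    """
    if tz_name.upper() == "UTC":
        tz = timezone.utc
    else:
        try:
            tz = ZoneInfo(tz_name)
        except (ZoneInfoNotFoundError, ValueError, OSError):
            # Unknown or malformed key: report it instead of raising,
            # so the calling agent receives a readable tool result.
            return f"Unknown timezone: {tz_name}"
    now = datetime.now(tz)
    return f"Current time in {tz_name}: {now.strftime('%Y-%m-%d %H:%M:%S %Z')}"


print(get_current_time_tz("UTC"))
print(get_current_time_tz("Not/AZone"))  # Unknown timezone: Not/AZone
```

Returning an error string rather than raising is a design choice here: a tool result is fed back to the model as text, so a readable message gives it a chance to retry with a valid name such as `Europe/Berlin`.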

Example 5: Customer Service Automation

def example_5_customer_service():
    """
    Example 5: Customer Service Automation

    This example demonstrates a customer service automation system.
    """
    print("=== Example 5: Customer Service Automation ===")

    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        # Create specialized customer service agents
        triage_agent = ConversableAgent(
            name="triage_agent",
            system_message="""You are a customer service triage agent.
            Categorize customer issues as: TECHNICAL, BILLING, GENERAL, or ESCALATE.
            Be helpful and professional.""",
            description="Categorizes and routes customer inquiries.",
        )
        technical_agent = ConversableAgent(
            name="technical_agent",
            system_message="""You are a technical support specialist.
            Help customers with technical issues, provide troubleshooting steps,
            and escalate complex issues when needed.""",
            description="Handles technical support issues.",
        )
        billing_agent = ConversableAgent(
            name="billing_agent",
            system_message="""You are a billing support specialist.
            Help customers with billing questions, payment issues,
            and account-related inquiries.""",
            description="Handles billing and account issues.",
        )
        supervisor = ConversableAgent(
            name="supervisor",
            system_message="""You are a customer service supervisor.
            Oversee the support process and make final decisions.
            Say 'RESOLVED' when the issue is handled.""",
            is_termination_msg=lambda x: "RESOLVED" in (x.get("content", "") or "").upper(),
        )

    # Create group chat for customer service team
    service_chat = GroupChat(
        agents=[supervisor, triage_agent, technical_agent, billing_agent],
        speaker_selection_method="auto",
        messages=[],
    )

    service_manager = GroupChatManager(
        name="service_manager",
        groupchat=service_chat,
        llm_config=llm_config,
    )

    # Simulate customer inquiry
    result = supervisor.initiate_chat(
        recipient=service_manager,
        message="""Customer inquiry: "Hi, I'm having trouble logging into my account.
        I keep getting an error message that says 'Invalid credentials' even though
        I'm sure my password is correct. Can you help me?"
        """,
    )

    return result

Example 6: Research and Analysis Workflow

def example_6_research_workflow():
    """
    Example 6: Research and Analysis Workflow

    This example shows how to build a research and analysis system.
    """
    print("=== Example 6: Research and Analysis Workflow ===")

    llm_config = LLMConfig(api_type="openai", model="gpt-4o-mini")

    with llm_config:
        # Create research team agents
        data_collector = ConversableAgent(
            name="data_collector",
            system_message="""You are a data collection specialist.
            Identify relevant data sources and information for research topics.""",
            description="Collects and organizes research data.",
        )
        analyst = ConversableAgent(
            name="analyst",
            system_message="""You are a data analyst.
            Analyze collected information and identify key insights and patterns.""",
            description="Analyzes data and identifies insights.",
        )
        report_writer = ConversableAgent(
            name="report_writer",
            system_message="""You are a report writer.
            Create clear, structured reports based on analysis results.""",
            description="Creates structured research reports.",
        )
        research_lead = ConversableAgent(
            name="research_lead",
            system_message="""You are the research team lead.
            Coordinate the research process and ensure quality.
            Say 'RESEARCH_COMPLETE' when satisfied with the results.""",
            is_termination_msg=lambda x: "RESEARCH_COMPLETE" in (x.get("content", "") or "").upper(),
        )

    # Create research group
    research_group = GroupChat(
        agents=[research_lead, data_collector, analyst, report_writer],
        speaker_selection_method="auto",
        messages=[],
    )

    research_manager = GroupChatManager(
        name="research_manager",
        groupchat=research_group,
        llm_config=llm_config,
    )

    # Start research project
    result = research_lead.initiate_chat(
        recipient=research_manager,
        message="""Let's conduct research on the current trends in multi-agent AI systems.
        Focus on recent developments, key applications, and future directions.""",
    )

    return result

Main execution function

def main():
    """
    Main function to run all examples.

    Note: Make sure to set your OPENAI_API_KEY environment variable
    before running these examples.
    """
    # Check for API key
    if not os.getenv("OPENAI_API_KEY"):
        print("Error: Please set your OPENAI_API_KEY environment variable")
        print("Example: export OPENAI_API_KEY='your-api-key-here'")
        return

    print("AG2 Practical Examples")
    print("=====================")
    print()

    examples = [
        ("Basic Two-Agent Conversation", example_1_basic_conversation),
        ("Human-in-the-Loop Workflow", example_2_human_in_loop),
        ("Group Chat with Multiple Agents", example_3_group_chat),
        ("Tool Integration", example_4_tool_integration),
        ("Customer Service Automation", example_5_customer_service),
        ("Research and Analysis Workflow", example_6_research_workflow),
    ]

    print("Available examples:")
    for i, (name, _) in enumerate(examples, 1):
        print(f"{i}. {name}")
    print()

    choice = input("Enter example number to run (1-6), or 'all' to run all examples: ")

    if choice.lower() == 'all':
        for name, func in examples:
            print(f"\n{'='*50}")
            print(f"Running: {name}")
            print('='*50)
            try:
                func()
                print(f"✓ {name} completed successfully")
            except Exception as e:
                print(f"✗ {name} failed: {str(e)}")
    else:
        try:
            example_num = int(choice)
            if 1 <= example_num <= len(examples):
                name, func = examples[example_num - 1]
                print(f"\nRunning: {name}")
                func()
                print(f"✓ {name} completed successfully")
            else:
                print("Invalid example number")
        except ValueError:
            print("Invalid input. Please enter a number or 'all'")
        except Exception as e:
            print(f"Error running example: {str(e)}")

if __name__ == "__main__":
    main()
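The menu logic in `main()` mixes input parsing with running the examples, which makes the parsing hard to check without calling the OpenAI API. As a sketch of one alternative, the parsing could live in a small pure function. `parse_choice` is a hypothetical helper, not part of the original file, and it returns zero-based indices into the `examples` list.

```python
def parse_choice(choice: str, num_examples: int) -> list:
    """Translate menu input into zero-based example indices.

    Hypothetical helper: accepts 'all' (any case, surrounding whitespace
    ignored) or a number from 1 to num_examples. Raises ValueError for
    anything else.
    """
    text = choice.strip().lower()
    if text == "all":
        return list(range(num_examples))
    number = int(text)  # int() raises ValueError for non-numeric input
    if not 1 <= number <= num_examples:
        raise ValueError(f"Example number must be between 1 and {num_examples}")
    return [number - 1]


print(parse_choice("all", 6))  # [0, 1, 2, 3, 4, 5]
print(parse_choice(" 3 ", 6))  # [2]
```

With the parsing separated this way, a `ValueError` can only come from bad menu input, so it is not confused with a `ValueError` raised inside an example while it runs.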
